Ed Zajac: Simulation of a Two-Giro Gravity Gradient Attitude Control System (1963) – the earliest 3d CGI film that I have found. It illustrates the hypothesis that only two gyro-servos would be needed to orientate a satellite in orbit.

James Cameron: Avatar, 2009. Movies that are about 80% CGI have become commonplace over the last decade or so. Cameron’s Avatar, like Cuarón’s Gravity (2013), Jackson’s Lord of the Rings (2001-03) and Verbinski’s Pirates of the Caribbean (2003), depends upon considerable CGI, and demonstrates just how far we have come since Ed Zajac’s 1963 film.

From Ed Zajac’s wireframe (1963), the first 3d animated model, to James Cameron’s Avatar (2009), the whole history of CGI spans less than 50 years. But look how far we’ve come! Of course, under all the rendered surfaces of Avatar, we’ll still find the familiar wireframe and polygon models.

Overview: CGI and film

It’s important to understand some of the fundamentals of CGI – how 3d models are made, how they are animated, and how they are made life-like or credible. So here’s my essential guide to CGI:

In MediArtInnovation I’ll be examining all the actual and likely components of the emerging total cinema, beginning with the tools that characterise what John Gaeta (vfx designer on The Matrix series) calls Virtual Cinematography. These will include motion-capture, blue-screen/green-screen live action, motion-tracking, expression-capture, crowd-simulation, character-simulation, augmented reality, projection mapping and previz software.


The very beginnings of CGI on film are in the 1950s, when cameras were put in front of cathode-ray-tube (CRT) screens and the images on the screen were either filmed in real time (for example, at 24 frames/sec) or shot frame-by-frame onto a customised camera or film-recorder. This is the technique that John Whitney (one of the pioneers of computer animation) used in his analog computer-animated short films and, in 1958, in his contribution to Saul Bass’s title sequence for Hitchcock’s Vertigo.

Saul Bass and John Whitney: Vertigo film titles 1958

http://www.youtube.com/watch?v=3YNCJ6uGg1I

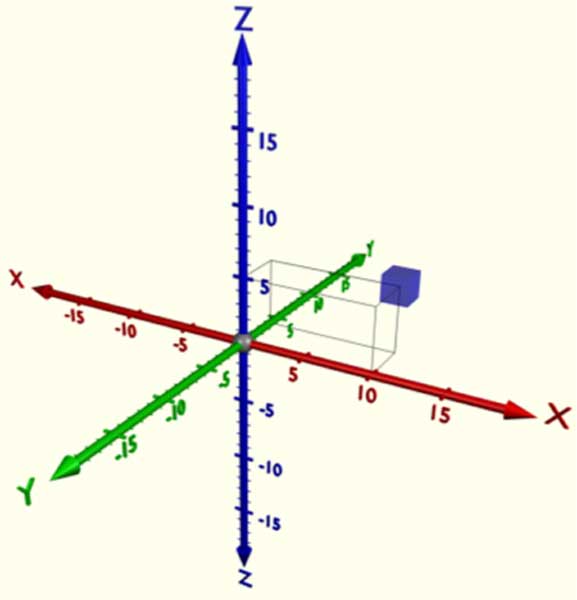

3d computer graphics uses the three-dimensional coordinates X, Y and Z, usually with X as the horizontal, Y as the vertical and Z as the depth dimension. Given a unit scale, the position and size of an object like this blue cube can be specified by its X, Y, Z coordinates.

By shuffling these coordinates (changing the numbers), objects can be repositioned, stretched, moved, distorted in any way numerically possible. Computer graphics is about coordinates and calculations.
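To make the point concrete, here is a minimal Python sketch (my own illustration, not taken from any particular package): a unit cube stored as its eight X, Y, Z corner points, with two hypothetical helpers, `translate` and `scale`, that do nothing more than add to or multiply those numbers.

```python
# A unit cube described by its eight corner coordinates (X, Y, Z).
# The vertex list and helper names are hypothetical, for illustration only.
CUBE = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]

def translate(points, dx, dy, dz):
    """Move every vertex by the same offset."""
    return [(x + dx, y + dy, z + dz) for x, y, z in points]

def scale(points, sx, sy, sz):
    """Stretch or squash every vertex about the origin."""
    return [(x * sx, y * sy, z * sz) for x, y, z in points]

# Stretch the cube to twice its height, then push it 5 units deeper along Z.
moved = translate(scale(CUBE, 1, 2, 1), 0, 0, 5)
print(moved)
```

Distortions, rotations and perspective are built the same way: more arithmetic applied to the same list of coordinates.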

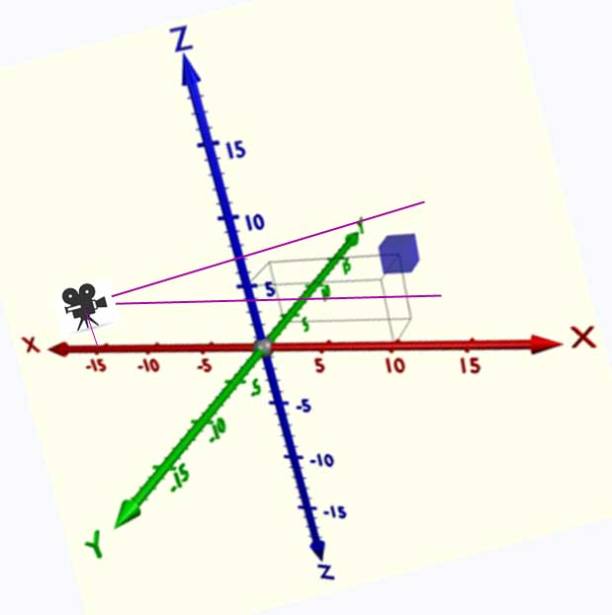

In CGI, a ‘virtual’ camera (VC) can be defined anywhere in XYZ space. Unlike a real camera, a virtual camera can have an infinitely adjustable (elastic) lens, from extreme telephoto to ultra wide-angle to macro, just by adjusting these values in software. The virtual camera can be moved anywhere in XYZ space at whatever speed the animator desires. It can shoot at whatever frame rate is desired, from ultra slo-mo to stop-frame, and it can shoot in stereo 3d too. It can be set to any aperture, any depth-of-field, any filter, any colour-space. A VC is not limited by gravity or mechanics – it is infinitely flexible.
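The ‘elastic lens’ really is just a number in the software. As a sketch, assuming an ideal pinhole camera and a 36 mm-wide sensor (a full-frame film-back, chosen purely for illustration), the focal length f sets the horizontal field of view as 2 × atan(sensor width / 2f), and the same f scales how camera-space points land on the image. The function names and the 1920-pixel image width are my own assumptions, not any renderer’s API.

```python
import math

def horizontal_fov(focal_length_mm, sensor_width_mm=36.0):
    """Horizontal field of view, in degrees, of an ideal pinhole camera."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))

def project(point, focal_length_mm, sensor_width_mm=36.0, image_width_px=1920):
    """Project a camera-space point (X right, Y up, Z forward into the scene)
    to pixel offsets from the image centre, using perspective division by Z."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point is behind the camera")
    px_per_mm = image_width_px / sensor_width_mm
    u = focal_length_mm * x / z * px_per_mm
    v = focal_length_mm * y / z * px_per_mm
    return u, v

# "Zooming" the virtual lens is just changing one number.
for focal in (14, 50, 300):
    print(f"{focal} mm lens: {horizontal_fov(focal):.1f} degrees across")
```

Going from 14 to 300 in that loop takes you from ultra wide-angle to long telephoto with no glass to change.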

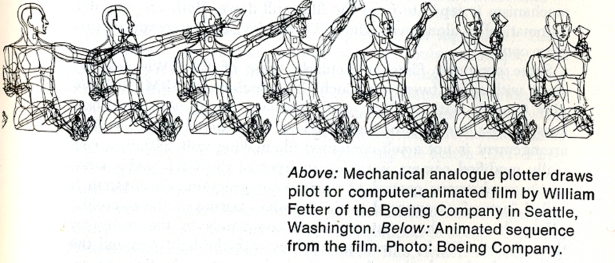

William Fetter: Boeing Man wireframe, 1963. These 3d animations were used to provide ergonomic design data for planning the layout of aircraft cockpit instruments. To my knowledge they weren’t committed to film.

Early computer graphics, though only simple wireframes, pushed the processing power of the time to its limits. Early computers would take several seconds to compute a 3d wireframe, several minutes to compute a complex 3d model, and often hours to compute a rendered image (with surface textures, point-source lighting etc). Each generation of computing power allowed further refinements to CGI – such as surface rendering, smooth-shading, texture-mapping, transparency, smoke and flame effects, cloud effects, skin-rendering, hair-rendering, fur-rendering, trees and forests, fractal landscapes, realistic humanoid and animal movement (motion-capture), and so on.
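One of those refinements, point-source lighting, can be sketched with Lambert’s cosine law, the textbook model for matte (diffuse) surfaces: brightness falls off with the cosine of the angle between the surface normal and the direction to the light. This is a minimal illustration with hypothetical helper names; it ignores distance falloff, colour, shadows and the smooth-shading interpolation that later systems added.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(surface_point, normal, light_pos, light_intensity=1.0):
    """Diffuse brightness at a surface point lit by one point light:
    intensity * max(0, N . L), where L is the unit vector towards the light."""
    n = normalize(normal)
    to_light = normalize(tuple(l - p for l, p in zip(light_pos, surface_point)))
    cos_angle = sum(a * b for a, b in zip(n, to_light))
    return light_intensity * max(0.0, cos_angle)

# A surface facing along +Z, lit from straight ahead, from 45 degrees, and from behind.
for light in [(0, 0, 5), (0, 3, 3), (0, 0, -2)]:
    print(light, round(lambert((0, 0, 0), (0, 0, 1), light), 3))
```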

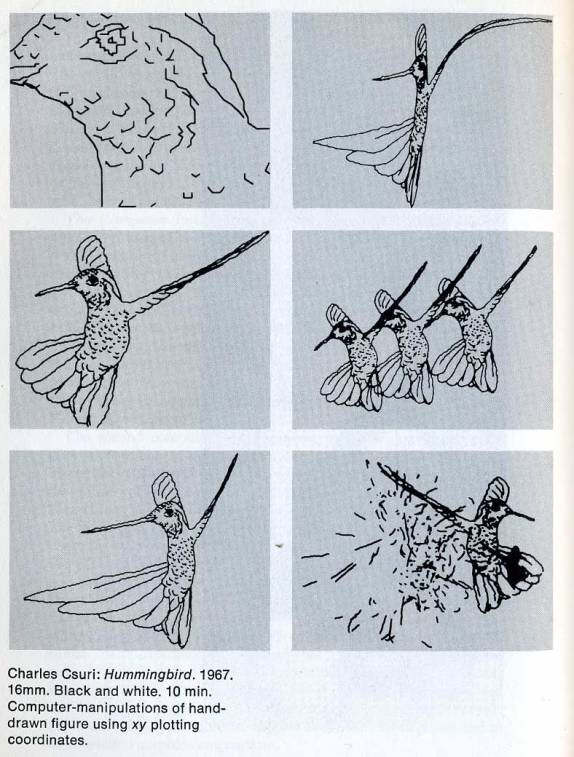

This sequence of 2d wireframes is by Charles Csuri, one of the great pioneers of CGI. He was experimenting here with hand-drawn images that had been digitised. Csuri demonstrates what can be done by algorithmically distorting the images (stretching, squeezing, copying, pasting etc). Yes – in the early days of computer graphics, all these basic operations had to be discovered and illustrated!

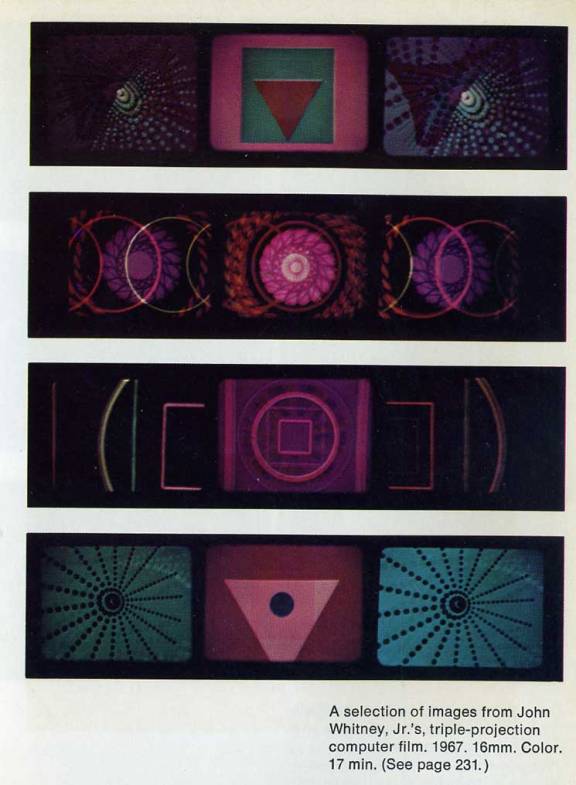

In this plate (like the others above, from Gene Youngblood’s Expanded Cinema, 1970), we see samples from John Whitney’s mesmeric computer-generated films, produced using his adapted analog computer, tinted with filters, and presented as triple-image projections in 1967.

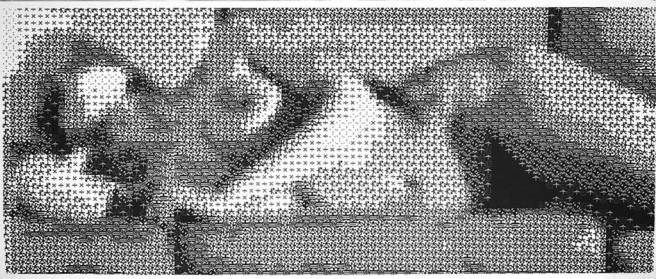

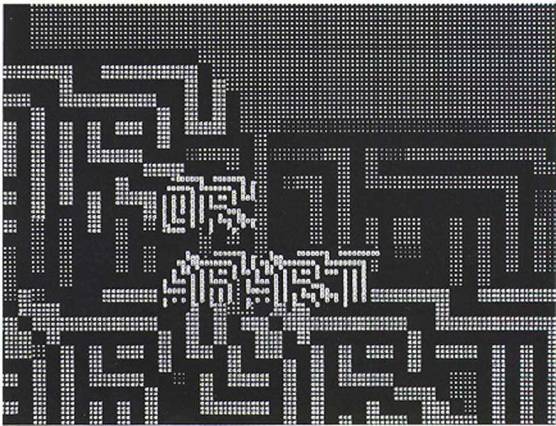

Indicative of the experimental computer-imaging techniques of the mid-1960s is Ken Knowlton’s discovery that computers can be programmed to calculate the grey-scale of tonal images:

Ken Knowlton: Studies in Perception No1, 1966. The realisation that the computer was a medium dawned very slowly, by increments, as computer-imaging, then computer animation, computer-poetry, computer-music (etc) emerged in the 1960s. The far-sighted Jasia Reichardt celebrated the computer as an art medium in her 1968 exhibition at the London ICA, Cybernetic Serendipity. Knowlton’s was one of the computer-images featured. This image is also reproduced from Gene Youngblood’s brilliant overview, Expanded Cinema (1970).
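The basic idea of rendering tone with a computer can be approximated with a character ramp: each grey level is replaced by a character whose ‘ink coverage’ roughly matches its darkness. This is a minimal sketch; the ramp string and the function name are my assumptions, and it is not a reconstruction of Knowlton’s original program or symbol set.

```python
# Characters ordered from least "ink" to most (an assumed ramp, for illustration).
RAMP = " .:-=+*#%@"

def to_characters(grey_rows):
    """Replace each grey level (0 = black, 255 = white) with a character whose
    visual density roughly matches its darkness."""
    lines = []
    for row in grey_rows:
        chars = []
        for grey in row:
            darkness = 255 - grey
            index = darkness * (len(RAMP) - 1) // 255  # 0 .. len(RAMP) - 1
            chars.append(RAMP[index])
        lines.append("".join(chars))
    return "\n".join(lines)

# A small radial "blob": dark in the middle, fading to white at the edges.
size = 9
image = [
    [min(255, int(60 * ((x - 4) ** 2 + (y - 4) ** 2) ** 0.5)) for x in range(size)]
    for y in range(size)
]
print(to_characters(image))
```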

Ken Knowlton developed the Beflix (Bell Flicks) animation system in 1963, which was used to produce dozens of artistic films by artists Stan VanDerBeek, Knowlton and Lillian Schwartz.[9] Instead of raw programming, Beflix worked using simple “graphic primitives”, like draw a line, copy a region, fill an area, zoom an area, and the like.
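To give a flavour of working with primitives rather than raw programming, here is a small Python sketch of three of them (draw a line, fill an area, copy a region) on a grid of character ‘pixels’. The function names are hypothetical and this is not Beflix’s own syntax; the line-drawing uses Bresenham’s algorithm, a standard technique I’ve swapped in for illustration.

```python
def make_grid(width, height, blank="."):
    """A blank 'frame' of width x height character cells."""
    return [[blank] * width for _ in range(height)]

def draw_line(grid, x0, y0, x1, y1, ink="#"):
    """Primitive 1: draw a line (Bresenham's algorithm, all octants)."""
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        grid[y0][x0] = ink
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy

def fill_area(grid, x, y, w, h, ink="#"):
    """Primitive 2: fill a w x h rectangle whose top-left cell is (x, y)."""
    for row in range(y, y + h):
        for col in range(x, x + w):
            grid[row][col] = ink

def copy_region(grid, x, y, w, h, dest_x, dest_y):
    """Primitive 3: copy a w x h block to (dest_x, dest_y). The block is
    snapshotted first, so overlapping source and destination are safe."""
    block = [row[x:x + w] for row in grid[y:y + h]]
    for r, cells in enumerate(block):
        for c, cell in enumerate(cells):
            grid[dest_y + r][dest_x + c] = cell

frame = make_grid(12, 4)
draw_line(frame, 0, 3, 3, 0)          # a diagonal stroke
copy_region(frame, 0, 0, 4, 4, 4, 0)  # repeat it to the right
fill_area(frame, 9, 1, 2, 2)          # and add a solid block
print("\n".join("".join(row) for row in frame))
```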

Stan VanDerBeek: Poemfield 1964-1967. The radically innovative VanDerBeek partners with Ken Knowlton – a great pioneer of computer graphics – to create computer-generated typographic poetry. “Poem Field is the name of a series of 8 computer-generated animations by Stan VanDerBeek and Ken Knowlton in 1964-1967. The animations were programmed in a language called Beflix (short for “Bell Flicks”), which was developed by Knowlton.” (wikipedia)

Stan VanDerBeek + Ken Knowlton: Poemfield 1964 – 1967

VanDerBeek was very helpful to me when I was a student: he sent me a bundle of material to help with my 1967 graduation thesis on the Gesamtkunstwerk – the composite art work. He studied with the composer John Cage, the dancer Merce Cunningham and the architect-philosopher Buckminster Fuller at Black Mountain College, became a very influential film-maker, and developed his famous Movie-Drome studio in Stony Point, New York.

http://www.youtube.com/watch?v=BMaWOp3_G4A

Bell Labs, a leading digital research centre at that time, made this short (16 min) documentary, A Computer Technique for the Production of Animated Movies (c1964), recording some of Knowlton’s research.

Big steps in CGI came in the late 1960s and early 1970s. Wireframes could be clad with polygon surfaces, and animation became more sophisticated. The earliest feature film to carry 3d CGI images was Richard Heffron’s Futureworld (1976), in which we glimpse some of these then state-of-the-art advances.

Richard Heffron: Futureworld, 1976. Heffron revisits the familiar territory of Michael Crichton’s Westworld (1973), where Yul Brynner plays a robotic replica of a cowboy-gunslinger. Brynner makes a cameo appearance in Futureworld too – alongside Peter Fonda. But Futureworld was the first major feature to incorporate 3d CGI – and the clip below illustrates the state of the art in the mid-1970s.

Futureworld, while not a Michael Crichton project like his Westworld, follows a fairly predictable story trope. Westworld was a futuristic theme-park ‘world’ where robotic cowboys populate a small western town. Adventurous theme-park visitors dress in character and can live among the cowboy robots; they can pick gun-fights with them, even shoot them. The cowboy robots only use blanks in their guns. But something goes wrong with one of the robots, and he starts shooting back at the visitors with real bullets.

(You’ll recognise this plot from Jurassic Park and many of Crichton’s other hugely successful movies.)

Same thing with Futureworld, only this time it wasn’t by Crichton.

http://www.imdb.com/title/tt0074559/

Steven Lisberger: Tron, 1982. Early chromakey/blue-screen matting of live-action filmed characters into a CGI wireframe set.

The next big step came with Disney’s Tron (Steven Lisberger, 1982). Here the plot has our hero, played by Jeff Bridges, actually entering the cyberspace world of a computer and battling evil virus-code villains. This is the earliest movie to feature colour-keyed live action film (heavily tinted here to give a CRT-blue colour), optically combined with a computer-generated, hand-coloured wireframe set.

Lisberger’s 1982 storyboard/visualisation of a frame for Tron. Xerox photocopy machines had a considerable impact on graphics at this time, but the marker-pen was the usual storyboard tool.

Despite its obvious shortcomings compared to CGI today, Tron was a box-office success and prepared the way for more computer animation, leading a dozen or so years later to John Lasseter’s Toy Story (1995), the first completely computer-generated feature.

To marry CGI with live action, the positions of objects and people in the real world (on studio movie-sets or on location) have to be synchronised with the ‘people’ and objects in CGI space. (And of course the camera lensing, lighting and aperture have to be synchronised too.) This is where two technologies come in: motion-capture (MOCAP) and motion-control.

MOCAP is the capture of an actor’s motion and movement. It is usually done in a green-screen or blue-screen studio. Motion control feeds data on camera position, aperture, filters etc from the real-world camera to the virtual camera, so that images from both worlds can be aligned in perfect synch.
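Once an actor’s markers have been captured as X, Y, Z points, the numbers can be turned into joint rotations for a CG skeleton. As a minimal sketch (hypothetical marker positions and function name; real MOCAP solvers fit a whole skeleton at once, frame by frame), the bend of an elbow can be recovered from three markers with a dot product:

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at marker b, between the segments b->a and b->c."""
    ba = [p - q for p, q in zip(a, b)]
    bc = [p - q for p, q in zip(c, b)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.dist(a, b) * math.dist(c, b)
    cos_angle = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cos_angle))

# Hypothetical marker positions (metres) for one captured frame.
shoulder, elbow, wrist = (0.0, 1.4, 0.0), (0.3, 1.4, 0.0), (0.3, 1.7, 0.0)
print(f"elbow bend: {joint_angle(shoulder, elbow, wrist):.1f} degrees")
```

The same angle, applied to the matching joint of a digital character, makes it bend its arm as the actor did.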

Before Digital: Optical and Mechanical FX

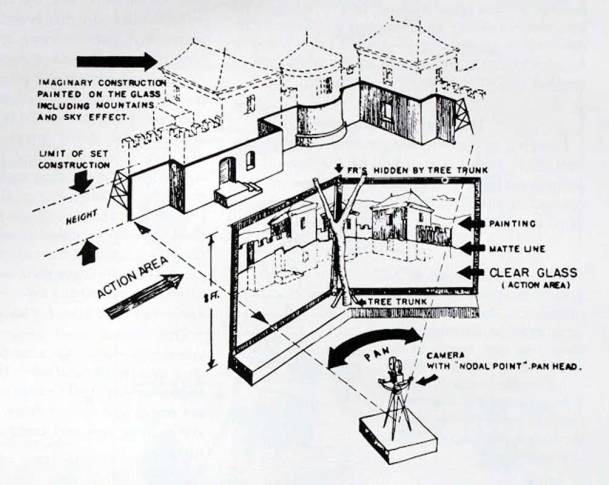

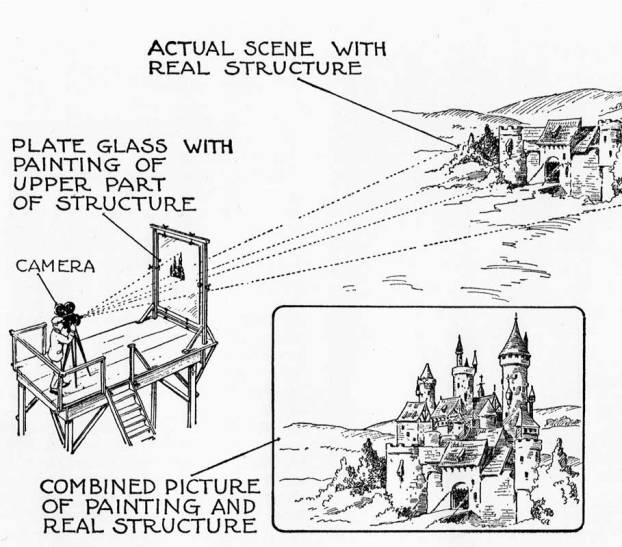

Of course, the world of virtual cinematography grew out of, and was ultimately based upon, the pre-digital world of optical special effects (opticals or mechanicals), where production designers, directors and cinematographers built real 3d sets and used either in-camera or laboratory-processed special effects. These early effects techniques, like this example of glass-matte painting, were in wide use by the 1930s (glass mattes were used in Gone With the Wind, Selznick 1939), and were still being used in Steven Spielberg’s Raiders of the Lost Ark in 1981.

Optical FX was the name given to in-camera effects and laboratory (processing) effects. In-camera effects include all the ways you could manipulate the filmic image inside the camera, such as double or multiple exposures, iris-fades, rewinding film, and matte shots (where part of the lens is blocked off by a cut-out mask or glass matte; the film is shot with the matte, then rewound, and a different matte is used to expose the rest of the frame).
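The matte-and-rewind double exposure has a direct digital equivalent: each output pixel takes the foreground where the matte is 1 and the background where it is 0. Here is a minimal sketch with images as nested lists of brightness values (the function name and the tiny frames are hypothetical; it is a simple linear blend, not a model of film density or grain).

```python
def composite(foreground, background, matte):
    """Combine two 'exposures' through a matte: where the matte is 1 the
    foreground shows, where it is 0 the background shows, and values in
    between blend linearly. Images are equal-sized nested lists."""
    return [
        [m * f + (1 - m) * b for f, b, m in zip(f_row, b_row, m_row)]
        for f_row, b_row, m_row in zip(foreground, background, matte)
    ]

# Hypothetical 2 x 3 frames: live action in the bottom row, a painted background above.
live_action = [[0, 0, 0], [40, 50, 60]]
painting = [[200, 210, 220], [0, 0, 0]]
matte = [[0, 0, 0], [1, 1, 1]]  # 1 = keep live action; 0 = keep painting
print(composite(live_action, painting, matte))
```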

Diagram showing the set-up for shooting with glass matte paintings – the real location and the glass background painting are married together in the camera.

Another glass matte rig. The compositing was an in-camera effect: the foreground scene is shot through a matte, so that painting and live action are composited together on the film negative.

Norman Dawn: Glass matte technique used in film, 1907. According to the blogger Yang, at the excellent thespecialeffectsblog.blogspot: “Norman Dawn made several improvements on the matte shot to apply it to motion picture, and was the first director to use rear projection in cinema. Dawn combined his experience with the glass shot with the techniques of the matte shot. Up until this time, the matte shot was essentially a double-exposure: a section of the camera’s field would be blocked with a piece of cardboard to block the exposure, the film would be rewound, and the blocked part would also be shot in live action. Dawn instead used pieces of glass with sections painted black (which was more effective at absorbing light than cardboard), and transferred the film to a second, stationary camera rather than merely rewinding the film. The matte painting was then drawn to exactly match the proportion and perspective to the live action shot. The low cost and high quality of Dawn’s matte shot made it the mainstay in special effects cinema throughout the century. [2] Traditionally, matte paintings were made by artists using paints or pastels on large sheets of glass for integrating with the live-action footage.[1] The first known matte painting shot was made in 1907 by Norman Dawn (ASC), who improvised the crumbling California Missions by painting them on glass for the movie Missions of California.[2] Notable traditional matte-painting shots include Dorothy’s approach to the Emerald City in The Wizard of Oz, Charles Foster Kane’s Xanadu in Citizen Kane, and the seemingly bottomless tractor-beam set of Star Wars Episode IV: A New Hope.” http://thespecialeffectsblog.blogspot.co.uk

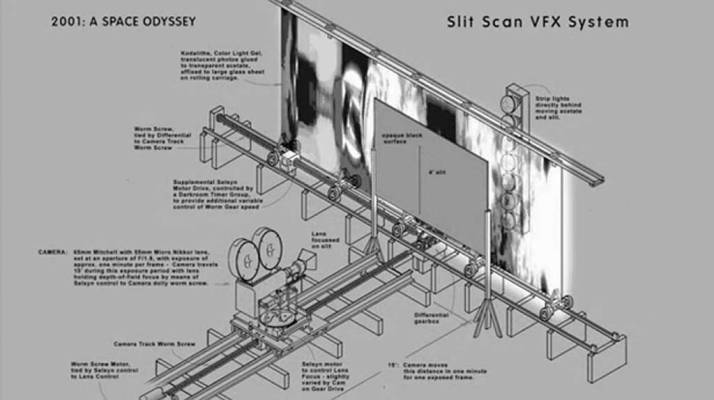

Slit-scan is an analog or manual technique in which a physical optical slit is used to progressively expose a frame of film. It is used for special fx, especially for elongating objects. It was used by the optical fx wizard Doug Trumbull for the stargate sequence in Kubrick’s 2001: A Space Odyssey (1968), and later by Bernard Lodge for the Doctor Who title sequence (1973).
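There is also a common digital cousin of slit-scan, in which each column of the output image is taken from a different frame of a moving sequence, so time is smeared across space. The sketch below assumes frames stored as nested lists and uses a hypothetical function name; it is an analogue of the effect, not a simulation of Trumbull’s moving-slit rig.

```python
def slit_scan(frames):
    """Digital slit-scan: column x of the output comes from frame x, so
    each step across the picture is also a step forward in time.
    `frames` is a list of equal-sized images (lists of rows of pixel values)."""
    height = len(frames[0])
    width = len(frames[0][0])
    if len(frames) < width:
        raise ValueError("need at least one frame per output column")
    return [[frames[x][y][x] for x in range(width)] for y in range(height)]

# Hypothetical sequence: a horizontal bar that rises one row per frame.
frames = []
for t in range(6):
    image = [[0] * 6 for _ in range(6)]
    image[5 - t] = [1] * 6
    frames.append(image)

# The rising bar is recorded as a slanted streak.
for row in slit_scan(frames):
    print("".join("#" if p else "." for p in row))
```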

Doug Trumbull/Stanley Kubrick: Star-gate sequence from 2001: A Space Odyssey (1968) – a 9.5 minute sequence engineered using slit-scan (and some old footage from Dr Strangelove). This is the most remarkable example – outside movies that are about the effects of drugs – of the influence of counterculture experimental film-making upon major Hollywood productions.

to be continued…
